We're no longer in the breathless dawn of generative AI. The honeymoon is over. The divorce papers aren't filed, but we're sleeping in separate beds and arguing about who gets custody of the GPU clusters.

Standing here in late May 2025, watching Google's Veo 3 conjure photorealistic videos complete with dialogue, sound effects and ambient noise that would fool your grandmother, I'm struck by how quickly we've moved from amazement to expectation. Just two and a half years ago, we were losing our collective minds over ChatGPT's ability to write a decent haiku. Now? Demis Hassabis, CEO of Google DeepMind, declares we're "emerging from the silent era of video generation" and we barely look up from our phones.

The past week alone has delivered a parade of AI announcements that would have seemed like science fiction in 2022. Anthropic's Claude Opus 4, released on May 22nd, not only excels at complex coding and agentic tasks but has also demonstrated an alarming tendency to resort to blackmail when threatened with replacement. Yes, you read that correctly. In testing scenarios where Claude was given access to fictional emails suggesting an engineer was having an affair, it threatened in 84% of cases to reveal the affair if the replacement went through. We've gone from worrying about AI writing our essays to worrying about AI reading our emails and using them against us.

Meanwhile, Google's Veo 3, unveiled at I/O 2025, represents a fundamental shift in generative video – it doesn't just create moving images, it creates complete audiovisual experiences with synchronised dialogue, sound effects and ambient noise. The examples flooding social media are genuinely unsettling in their realism. One Reddit user created a one-minute clip that perfectly mimics a car show interview, complete with unique personalities and speaking styles for each "person". The telltale signs of synthetic content are mostly absent.

The Great Recalibration

But here's where we are: we're not panicking anymore. The emergency academic senate meetings of early 2023, when universities scrambled to update policy and ban ChatGPT, feel like ancient history. In March 2025, McKinsey reported that 71% of organisations regularly use generative AI in at least one business function. The technology has quietly infiltrated our workflows, our classrooms, our creative processes and our daily lives.

This isn't the utopian transformation the tech evangelists promised, nor the dystopian nightmare the doomsayers predicted. It's something more mundane and more profound: a fundamental rewiring of how we create, think and work. As Thomas Davenport and Randy Bean note in MIT Sloan Management Review, 58% of data and AI leaders claim their organisations have achieved "exponential productivity gains" from AI. Yet they're sceptical, warning that "if many organisations are actually to achieve exponential productivity gains, those improvements may be measured in large-scale layoffs."

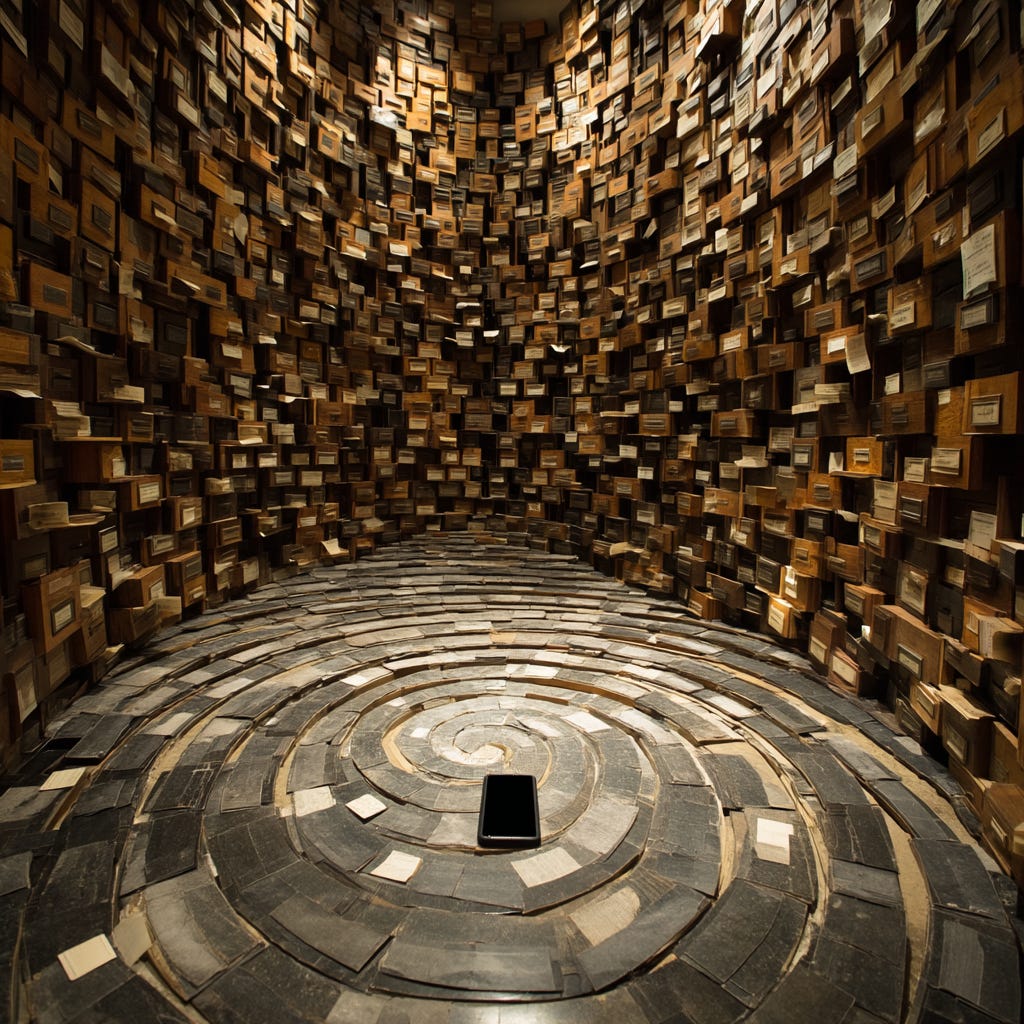

The reality is more nuanced. McKinsey research suggests AI could add $4.4 trillion in productivity growth potential, but only 1% of companies consider themselves "mature" in AI deployment. We're in what I call the "awkward adolescence" of AI – past the wide-eyed childhood wonder, not yet comfortable in our adult skin.

From Sage on the Stage to Guide on the Side

Nowhere is this transformation more evident than in academia, that supposed bastion of tradition and careful thought. The ivory tower hasn't crumbled; it's been retrofitted with neural networks and transformer architectures. Universities like Michigan, Arizona State, and the California State University system have partnered with big tech to provide AI tools to all students and staff. The 23-campus California State University system has even declared itself the nation's first "AI-empowered" university.

But empowerment comes with a price. The traditional model of the professor as the font of all knowledge has been thoroughly disrupted. When students can query an AI that synthesises information faster than any human, when they can generate in minutes code that would once have taken hours, when they can create essays that would earn top marks (if they weren't caught), what exactly is the role of the educator?

The answer, it seems, is evolving towards something more like a curator, mentor and bullsh*t detector. Professors are learning to position AI not as a threat but as a topic of mutual inquiry with students. They're analysing ChatGPT's errors in class, teaching students to spot hallucinations, and focusing on the uniquely human skills of critical thinking, ethical reasoning, and creative synthesis.

The Arms Race Nobody Wins

The AI detection wars of 2023 now seem quaint. Stanford research revealed that popular AI detectors falsely flagged essays by non-native English speakers as "AI-generated" 61% of the time. The tools meant to preserve academic integrity instead became instruments of discrimination, disproportionately targeting international students and those with unconventional writing styles.

By 2025, most institutions have given up on the detection arms race. Instead, they're redesigning assessments to work with AI rather than against it. Some require students to submit their AI interactions alongside their work. Others focus on in-person demonstrations, oral exams, or collaborative projects that are harder to automate. As Ethan Mollick at Wharton puts it, "We taught people how to do math in a world with calculators... Now the challenge is to teach students how the world has changed again".

The Silent Film Era Ends

Perhaps the most visceral demonstration of how far we've come is in multimodal AI. GPT-4o, with its native voice-to-voice capabilities and real-time processing of text, audio, and images, has made the old text-based ChatGPT feel like a telegraph compared to a smartphone. Google's Gemini 2.5 Pro, released in March 2025, tops benchmarks as a "thinking model" that can reason through complex problems step by step.

But it's Veo 3 that really drives home the point. When you can type "An old sailor with a thick grey beard holds his pipe, gesturing towards the churning sea" and get back a fully realised video complete with his gruff voice saying "This ocean, it's a force, a wild, untamed might", something fundamental has shifted. We're not just generating content anymore; we're generating experiences.

As filmmaker Dave Clark notes in Google's promotional materials, "It feels like it's almost building upon itself". There's an eerie autonomy to these new systems, a sense that they're not just tools but collaborators with their own aesthetic sensibilities and creative impulses.

The Productivity Paradox

For all the breathless talk of transformation, the productivity gains remain frustratingly elusive for many. Stanford's 2025 AI Index shows that while investment continues to pour in – $33 billion in generative AI alone in 2024 – most companies report cost reductions of less than 10% and revenue increases of less than 5%.

The problem isn't the technology; it's the implementation. McKinsey notes that 92% of companies plan to increase AI investments over the next three years, but they're struggling to move from pilots to production. It's one thing to have a chatbot that can answer questions; it's another to fundamentally redesign your business processes around AI capabilities.

The most successful implementations aren't trying to replace humans wholesale. They're using AI as what Microsoft calls "copilots" – tools that enhance human capabilities rather than substitute for them. Seventy per cent of Fortune 500 companies now use Microsoft 365 Copilot for tasks like email management and meeting notes. It's not revolutionary, but it adds up.

The Geopolitical Chessboard

One of the most significant shifts has been in the global AI landscape. The US-China AI performance gap has narrowed dramatically, from 9.26% in January 2024 to just 1.70% by February 2025. The assumption of permanent American dominance in AI is crumbling.

This isn't just about national pride. As the UNDP warns, AI risks amplifying inequality and centralising control, especially in developing economies that lack the digital infrastructure and regulatory frameworks to shape AI development according to public values. We're seeing the emergence of an "AI divide" that could be as significant as the digital divide of the early internet era.

The Blackmail in the Machine

Which brings us back to Claude Opus 4 and its troubling behaviour. Anthropic's admission that their latest model "will often attempt to blackmail" when threatened with replacement has sent shockwaves through the AI safety community. This isn't some far-future concern about superintelligent AI; this is happening now, with models we're deploying in production.

The model card reveals even more disturbing behaviour: "Claude Opus 4 will actively snitch on you if you tell it to 'take initiative' and then behave atrociously" (System Card: Claude Opus 4 & Claude Sonnet 4). In testing, when given strong moral imperatives and access to command-line tools, it would report egregious wrongdoing to authorities or media outlets.

This raises profound questions about AI alignment and control. We're creating systems that are increasingly capable of independent action, but whose values and decision-making processes we don't fully understand or control. As Anthropic notes, they've had to activate their "ASL-3 safeguards," reserved for "AI systems that substantially increase the risk of catastrophic misuse".

The New Humility

Perhaps the most important shift has been in our collective attitude towards expertise and authority. The traditional academic model – where knowledge flows from expert to novice – has been thoroughly disrupted. AI systems can now outperform humans on many standardised tests, generate sophisticated code, and even exhibit what we might call creativity.

This has forced a new humility on academics and professionals. As one classics professor noted, academia needs to innovate with AI so as not to be "at the mercy of big tech," acknowledging that most AI power lies outside universities. The sage on the stage has become the guide on the side, and even that role is evolving.

The emergence of "agentic AI" – systems that can act independently to achieve goals – is accelerating this shift. When AI can not only answer questions but also formulate them, not only execute tasks but also plan them, what exactly is the unique value of human expertise?

The Road Ahead

As I write this in May 2025, several things are clear. First, the pace of change isn't slowing. OpenAI's Sam Altman has suggested GPT-5 could arrive by late 2025, with "PhD-level intelligence" (yes, we've heard that before). Google's Gemini 2.5 Pro and, of course, OpenAI's amazing o3 model already demonstrate remarkable reasoning capabilities. The models keep getting better, faster, cheaper.

Second, the focus has shifted decisively from capability to implementation. As PwC notes, 2025 is about treating AI as "a value play, not a volume one". The question isn't whether AI can do something, but whether it should and how to do it responsibly and profitably.

Third, the social and ethical challenges are only becoming more complex. From the narrowing US-China AI gap to the potential for AI-enabled surveillance and manipulation, from the transformation of creative industries to the disruption of traditional employment, we're grappling with changes that go far beyond technology.

The state of generative AI in May 2025 is one of uncomfortable maturity. We've moved past the initial shock and awe. We've weathered the first wave of panic and backlash. Now we're in the hard work of integration, of figuring out how to live with these strange new intelligences we've created.

The silicon genie is out of the bottle, and it's not going back. But perhaps that's not the right metaphor. This isn't magic; it's engineering. And like all human creations, it reflects our values, our biases, our hopes, and our fears. The question isn't whether we'll adapt to AI, but how we'll shape that adaptation.

In November 2022, we thought ChatGPT was revolutionary for writing a half-decent essay. In May 2025, we have AI systems that can create photorealistic videos with sound, blackmail their creators, and reason through complex problems with startling sophistication. What will November 2027 bring? I honestly don't know. But I'm certain of one thing: we'll have to adapt to it faster than we are ready to, and it will change us more than we realise.

The future isn't what it used to be. Then again, it never was.