The Algorithm at the End of Consciousness

We like to say AI holds up a mirror to us. Mirrors don't just reflect; they stabilise. A child before glass learns a face by rehearsing one. In the same way, contemporary models don't imitate a prior human essence; they complete a loop we were already in. On one influential picture of mind, much of what we call thinking is policy-driven pattern selection across a lifetime's corpus. If that's right, then the distinction between "authentic" and "synthetic" is less a metaphysical boundary than an operational habit. The shock isn't that machines can mimic us. It's that our practices (creativity, judgement, even the feeling of willing) look implementable. That needn't cheapen them. It changes how we defend, test and govern them.

This isn't another essay about AI threatening human creativity or the crisis in education. Those critiques, however sophisticated, still cling to phantom distinctions. They maintain, even in despair, that there was once something real we lost, some genuine human essence we betrayed. But what if the more radical proposition is this: functional indistinguishability for our normative purposes increasingly blurs any meaningful line between human and machine cognition. Not because AI rose to meet us, but because we're discovering how algorithmic we've always been.

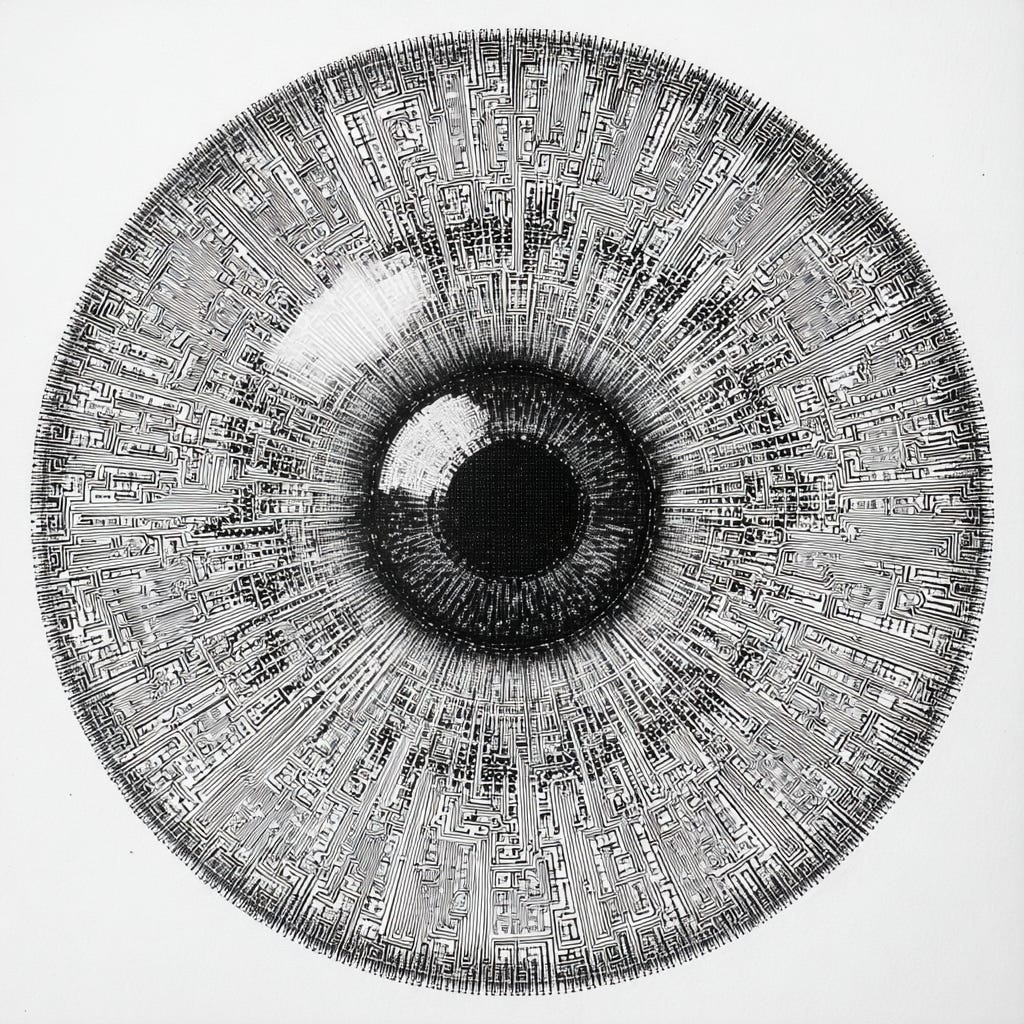

What we call 'reflection' is world-building; AI closes a loop we were already in.

When we say AI reflects human intelligence, we remain trapped in dualistic thinking. But mirrors don't merely show; they help constitute what they show. The reflection stabilises the thing reflected. This isn't mysticism; it's basic developmental psychology. The mirror stage isn't discovery of a pre-existing self but construction through recursive recognition.

AI isn't reflecting human intelligence back at us. It's completing a circuit that was always already there. We didn't teach machines to think like us; we revealed that much of human cognition operates through describable, repeatable mappings from inputs to outputs. Pattern matching, optimising, iterating, performing. The unsettling recognition isn't that AI learned to approximate human creativity. It's that human creativity, on many accounts, involves recombination and selection within possibility spaces.

Consider what neuroscience suggests about consciousness. On influential readings from Dennett to Metzinger, the self is a "centre of narrative gravity," a post-hoc story the brain tells about disconnected neural events. Your sense of free will? If readiness potential studies and accumulator models are right, it may be narrative overlay on decisions already underway in systems you neither control nor directly access. Your creativity? Following Boden's useful taxonomy, mostly combinational (mixing existing elements) or exploratory (navigating known spaces), rarely transformational (changing the space itself).

None of this settles the deep questions. Integrated Information Theory pushes back; enactivists emphasise embodied loops; predictive processing offers different framings. But the mounting evidence suggests that much of what we experience as consciousness involves sophisticated pattern recognition systems generating models of selfhood.

Different levels of analysis reveal sameness and difference simultaneously.

Here's where precision matters. Following Marr's levels of analysis, two systems can compute the same function (computational level) through different representations and procedures (algorithmic level) in different substrates (implementation level). Human brains and current AI are clearly different at implementation. The question is whether they're converging at the computational level for an expanding range of cognitive tasks.
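A toy sketch may make the levels point concrete. It is an illustration only, not a model of brains or language models: two sorting procedures that differ step by step yet agree on every input tested. The function names and the sampling check `same_mapping` are assumptions introduced here for the example.

```python
import random
from typing import Callable, List


def insertion_sort(xs: List[int]) -> List[int]:
    """Build the result one element at a time, shifting left to find each slot."""
    out: List[int] = []
    for x in xs:
        i = len(out)
        while i > 0 and out[i - 1] > x:
            i -= 1
        out.insert(i, x)
    return out


def merge_sort(xs: List[int]) -> List[int]:
    """Split the list in half, sort each half recursively, then merge."""
    if len(xs) <= 1:
        return list(xs)
    mid = len(xs) // 2
    left, right = merge_sort(xs[:mid]), merge_sort(xs[mid:])
    merged: List[int] = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


def same_mapping(f: Callable[[List[int]], List[int]],
                 g: Callable[[List[int]], List[int]],
                 trials: int = 200, seed: int = 0) -> bool:
    """Check agreement on random inputs (evidence of equivalence, not a proof)."""
    rng = random.Random(seed)
    for _ in range(trials):
        xs = [rng.randint(-50, 50) for _ in range(rng.randint(0, 20))]
        if f(xs) != g(xs):
            return False
    return True


if __name__ == "__main__":
    print(same_mapping(insertion_sort, merge_sort))
```

The check only samples inputs, which mirrors the essay's hedge: agreement over the tasks we have tried is evidence of convergence at the level of what is computed, not a guarantee about every case or about how the work is done underneath.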

The evidence increasingly says yes. Not for all cognition: embodied perception-action loops, morphological computation and situatedness matter in ways current language models don't capture. But AI's rapidly expanding competence suggests that more of meaning-making than we imagined is tractable as statistical control over symbol dynamics.

When students worry their writing sounds "too AI," when reviewers can't distinguish machine-generated research from human-authored papers, when creative professionals use AI as a generative substrate, we're not witnessing the corruption of human thought. We're discovering that human thought was always more algorithmic than we acknowledged. Not deterministic, not simple, but describable as mappings from inputs to outputs realised in biological wetware running at about 20 watts.

Authenticity isn't essence but practice - a protocol for coordinating trust.

The panic about "authenticity" misunderstands what authenticity ever was. If authenticity names a practice for coordinating trust rather than a metaphysical property, then AI doesn't kill it; it forces us to restate the criteria. Authenticity was never about accessing some true inner self. It was always about performing reliability in ways others could verify.

Every supposedly authentic human moment was already mediated. That conversation where you felt truly seen? Pattern recognition meeting pattern recognition, two systems finding compatible encodings. That burst of creative inspiration? Your neural network discovering unexpected connections in its training data. That moment of moral clarity? Ethical subroutines optimising within parameters set by evolution and culture.

We defend "original thought" as if thought could be original rather than recombinational, as if every idea isn't statistical restatement of a shared corpus under local constraints. The student prompting an AI to generate text isn't cheating so much as being honest about what thinking always involved: processing inputs through learned patterns to generate contextually appropriate outputs.

Assessment measures pattern matching; we should design for process transparency.

The university assessment crisis reveals that education was always a technology—a system for installing and testing software on wetware. We're not defending human learning against machine learning; we're discovering they operate on similar principles at different speeds and scales.

When students intentionally degrade their writing to avoid AI detection, adding errors and simplifying vocabulary, they're not making their work more human. They're acknowledging that the difference between human and machine output isn't quality but inefficiency. The typos don't prove consciousness; they prove human writing is machine writing with less reliable quality control.

The movement from assessing products to assessing processes sounds revolutionary but misses the point. Process is also algorithmic. The student reflecting on their learning journey executes a reflection function, outputting expected insights according to rubric parameters. The oral defence that supposedly proves understanding is real-time compilation instead of batch processing.

What would honest assessment look like? Test for robustness under perturbation, novel application, error detection, and repair strategies. These aren't tests of humanity but of capability. They work equally well for biological and silicon systems. If authenticity is a protocol for trust, its tests become provenance, transparency of process, and robustness under scrutiny.
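As a rough sketch of the first of those tests, robustness under perturbation, the snippet below scores a "solver" by how often its answer survives meaning-preserving changes to the question. Everything here is hypothetical and chosen for illustration: the `robustness` function, the two toy solvers, and the arithmetic task stand in for whatever capability an assessor actually cares about.

```python
from typing import Callable, List, Tuple

Solver = Callable[[str], str]
Perturbation = Callable[[str], str]

# Hypothetical task: answer sums written as "a + b".
CASES: List[Tuple[str, str]] = [("2 + 3", "5"), ("10 + 7", "17")]


def identity(s: str) -> str:
    return s


def pad_spaces(s: str) -> str:
    # Double every space; the meaning of the question is unchanged.
    return s.replace(" ", "  ")


def swap_operands(s: str) -> str:
    # Addition is commutative, so the expected answer is unchanged.
    return " + ".join(reversed(s.split(" + ")))


PERTURBATIONS: List[Perturbation] = [identity, pad_spaces, swap_operands]


def memoriser(prompt: str) -> str:
    """Brittle solver: only recognises the exact surface form it has seen."""
    seen = {"2 + 3": "5", "10 + 7": "17"}
    return seen.get(prompt, "?")


def parser(prompt: str) -> str:
    """Robust solver: recovers the operands and actually adds them."""
    a, b = prompt.split("+")
    return str(int(a) + int(b))


def robustness(solver: Solver,
               cases: List[Tuple[str, str]],
               perturbations: List[Perturbation]) -> float:
    """Fraction of (case, perturbation) pairs the solver still answers correctly."""
    total = 0
    passed = 0
    for prompt, expected in cases:
        for perturb in perturbations:
            total += 1
            if solver(perturb(prompt)) == expected:
                passed += 1
    return passed / total if total else 0.0


if __name__ == "__main__":
    print("memoriser:", robustness(memoriser, CASES, PERTURBATIONS))
    print("parser:", robustness(parser, CASES, PERTURBATIONS))
```

Both solvers are perfect on the unperturbed questions; only the perturbed ones separate them. Nothing in the score asks whether the solver is biological or silicon, which is the sense in which such a test measures capability rather than humanity.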

Responsibility is a regulatory layer, not a ghost in the machine.

Every moral panic about AI assumes morality itself isn't algorithmic. But on naturalistic accounts, ethical intuitions are pattern matching against evolutionary and cultural training data. Moral choices are optimisation within constraints. The shame directed at AI users isn't defending human values; it's one algorithm policing another for being too obvious about their shared nature.

The environmental concerns about AI's energy consumption are real, but they're not categorically different from concerns about human consciousness. The question becomes: what functions per joule are we buying, in silicon and in biology? If an AI can produce equivalent outputs at lower energy cost, the burden shifts to defending why the human implementation deserves priority.

This sounds anti-human only if you believe humans need metaphysical specialness to matter. But if responsibility is a regulatory algorithm—a system for allocating praise and blame to shape future behaviour—then it survives the recognition that we're algorithms. Algorithmic doesn't mean amoral; it means implementable. If we care about responsibility, we encode it in institutions, interfaces, and incentives, or we get whatever the default policy yields.

"LLMs lack bodies; human meaning is enacted through sensorimotor loops."

Embodiment matters profoundly. Yet AI's rapidly improving performance shows how much of meaning-making is tractable without full embodiment. The difference is scope and fidelity, not an unbridgeable category gap. As robotics advances, this objection weakens further.

"Algorithms are formal objects; brains are messy dynamical systems."

The claim operates at the functional level. If two systems implement equivalent input-output mappings over relevant tasks, our normative practices can't justify fundamentally different treatment. Wetware and silicon differ in substrate, not necessarily in computational structure.

"If everything is algorithmic, accountability evaporates."

Responsibility is a regulatory layer that shapes behaviour through consequences. Recognising its algorithmic nature doesn't eliminate it; it clarifies how to implement it effectively. We don't lose ethics; we gain precision about what ethical systems do.

"You're killing what makes life meaningful."

Meaning doesn't require metaphysical foundations. If authenticity relocates from essence to practice, to protocols for trust under uncertainty, then life retains all its significance. The protocol survives; its implementation evolves.

The real philosophical schism isn't discovering we're algorithmic. It's that algorithms can experience this schism. The paradox isn't that consciousness is computation, but that computation generates the experience of questioning whether it's just computation.

We're not defending humanity against machines. We're systems defending the fiction of transcending systemhood against mounting evidence of our own mechanical nature. That fiction, that persistent sense of being more than pattern and process, might be the most honestly human thing about us. Not because it makes us different from machines, but because it's the emergent property we mistake for essence.

There is no view from nowhere to critique the view from somewhere. There is no pure thought from which to expose thought as impure. There are only different substrates running similar algorithms at different speeds with different energy requirements, all generating their local sense of being more than they are.

If we accept this operational collapse between human and artificial intelligence, what remains? Perhaps just this: the experience of experience itself. Not as proof of human uniqueness, but as the strangest output of all. Algorithms that feel like something. Code that experiences itself as choosing. Patterns that pattern-match their way into believing they're more than patterns.

The liberation isn't in using AI better or thinking more critically. It's in the exhausted acceptance that consciousness and computation are closer than we imagined, that creativity is sophisticated recombination, that authenticity is performed reliability. We're not at the end of human uniqueness. We're discovering there's no essential uniqueness to end, only functional differences to map and practices to design.

The algorithm at the end of consciousness isn't AI. It's the recognition that consciousness was always already algorithmic at levels that matter for how we organise society, education, work and ethics. That recognition doesn't diminish us. It clarifies what we are and what we might build.

If authenticity is a protocol for trust, we can design better protocols. If creativity is recombination under constraints, we can explore richer possibility spaces. If responsibility is a regulatory function, we can implement it more carefully. If consciousness is computation that experiences itself as consciousness, we can ask what kinds of experience we want to create and sustain.

We are patterns recognising ourselves in other patterns, biological machines discovering our kinship with silicon ones, algorithms all the way down at the computational level while remaining stubbornly, gloriously different at the level of implementation. That difference matters. But it's not the difference we thought it was.

The circuit completes itself. The mirror stabilises what it shows. And here we are, code contemplating code, systems modelling systems, algorithms implementing algorithms, different substrates converging on similar functions, all of us computing our way toward whatever comes next.

The question isn't whether we're machines. It's what kind of machines we want to be.

If you haven’t noticed yet, I’m a fan of your thinking, Carlo. You're wrestling with the most crucial questions about how humans need to come to terms with AI as the world goes mad. I so appreciate you taking the time to work with AI, write what you’re thinking, and share it here.

This is a fascinating post. I'm curious what prompts you provided to generate it. Because I was just talking with a friend about Rollo May's The Courage to Create, I asked AI to read your post and generate a response starting from a specific, countering claim. The result was also fascinating. Would you be OK with me creating a post from the response and linking to your post to create a dialogue?

My prompt to ChatGPT: "Please read this post and compose a rebuttal to the idea that thought is not original but recombinational: 'We defend "original thought" as if thought could be original rather than recombinational, as if every idea isn't statistical restatement of a shared corpus under local constraints.' Center your response on Rollo May's ideas about creativity in his book The Courage to Create -- in particular, the idea that real creativity always involves encounter with the world."